Data preprocessing is a crucial step in building any machine learning model. Most models can only work with numeric input, and the way features are represented strongly affects how well a model can learn the relationship between the independent features and the target feature, and therefore how accurate it becomes. By data preprocessing we mean scaling the data, converting categorical values to numerical ones, normalizing the data, and so on. Today we will discuss how to encode categorical variables as numbers and learn the differences between two common encoding techniques: One Hot Encoding and Label Encoding. The programming language used for reference here is Python. Let us go through these two one by one and then compare them:

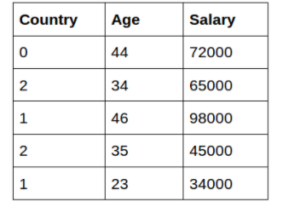

Label Encoding

Label Encoding is a data preprocessing technique that converts a categorical column from string values to numeric ones. This is needed because most machine learning models cannot work with strings directly, so the categories must be encoded in a machine-understandable format. Label Encoding does this by assigning each category of a feature its own integer (0, 1, 2, and so on). Because integers have a natural order, a model may treat the encoded values as ranked. That makes Label Encoding a good fit for hierarchical (ordinal) features, where the categories really do have an order, such as education levels or sizes like small, medium and large. If Label Encoding is applied to non-hierarchical (nominal) features, the model may learn a ranking that does not exist, which can hurt its accuracy, so it is usually not a good choice for such features. Note that scikit-learn's `LabelEncoder` assigns the integers in alphabetical order of the categories, so to preserve a specific hierarchy you may need to define the mapping yourself.
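As a minimal sketch of the point about hierarchy, assume a hypothetical `Education` column (not part of the article's data). The example contrasts scikit-learn's `LabelEncoder`, which numbers categories alphabetically, with an explicit mapping that follows the intended order; the `education_order` dictionary is an illustrative assumption.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical ordinal feature: education levels have a natural order
df = pd.DataFrame(
    {"Education": ["High School", "Master", "Bachelor", "PhD", "Bachelor"]}
)

# LabelEncoder assigns codes in sorted (alphabetical) order of the categories,
# so "Bachelor" (0) ends up below "High School" (1)
alpha_codes = LabelEncoder().fit_transform(df["Education"])

# An explicit mapping keeps the codes consistent with the hierarchy
education_order = {"High School": 0, "Bachelor": 1, "Master": 2, "PhD": 3}
df["Education_encoded"] = df["Education"].map(education_order)

print(alpha_codes.tolist())              # [1, 2, 0, 3, 0]
print(df["Education_encoded"].tolist())  # [0, 2, 1, 3, 1]
```

Only the explicit mapping guarantees that a higher code means a higher level of education.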

Implementation

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

df = pd.read_csv("Salary.csv")

# Replace each country name with an integer code (assigned in alphabetical order)
label_encoder = LabelEncoder()
df['Country'] = label_encoder.fit_transform(df['Country'])
```

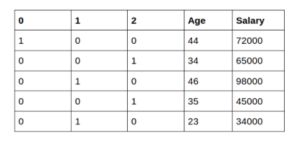
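Since `Salary.csv` is not included here, the following self-contained sketch replaces it with a small hypothetical DataFrame so you can see what the encoder produces. The `classes_` attribute and the `inverse_transform` method let you inspect and reverse the mapping. Note that scikit-learn documents `LabelEncoder` as intended for target labels; for input features, `OrdinalEncoder` applies the same idea and accepts an explicit `categories` argument.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical stand-in for Salary.csv (the real file's contents are not shown)
df = pd.DataFrame({"Country": ["France", "Spain", "Germany", "Spain", "France"]})

label_encoder = LabelEncoder()
df["Country"] = label_encoder.fit_transform(df["Country"])

# classes_ holds the categories in the order of their integer codes
print(label_encoder.classes_.tolist())  # ['France', 'Germany', 'Spain']
print(df["Country"].tolist())           # [0, 2, 1, 2, 0]

# inverse_transform maps the codes back to the original strings
original = label_encoder.inverse_transform(df["Country"].to_numpy())
print(original.tolist())  # ['France', 'Spain', 'Germany', 'Spain', 'France']
```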

One Hot Encoding

One Hot Encoding is another technique for converting categorical string variables to numeric ones, but it works quite differently. Instead of replacing each category with a single integer, it creates a dummy variable (a new 0/1 column) for each category of the categorical feature. Each row has a 1 in the column of its own category and 0 in all the others, so every category is represented as a one-hot vector in an n-dimensional space, where n is the number of categories. Because no category receives a larger number than another, this technique is best suited for non-hierarchical (nominal) features, where the categories have no order relative to one another. In that sense it is the opposite of Label Encoding. One Hot Encoding does have a drawback, known as the Dummy Variable Trap: the dummy columns of a feature always sum to 1, so any one of them can be predicted exactly from the others. This leads to multicollinearity, meaning a dependency between the independent features, which is a problem especially for linear models such as linear regression. To avoid it, we drop one of the dummy columns before training the machine learning model.
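A minimal sketch of the Dummy Variable Trap, using a hypothetical `Country` column: the full set of dummy columns sums to 1 in every row, and `pd.get_dummies(..., drop_first=True)` removes one column to break that dependency. The `dtype=int` argument only makes the columns 0/1 integers instead of booleans.

```python
import pandas as pd

# Hypothetical nominal feature with three categories
df = pd.DataFrame({"Country": ["France", "Spain", "Germany", "Spain"]})

# One dummy column per category
full = pd.get_dummies(df["Country"], dtype=int)
print(full.columns.tolist())      # ['France', 'Germany', 'Spain']

# Every row sums to 1, so any one column equals 1 minus the others:
# this exact linear dependency is the dummy variable trap
print(full.sum(axis=1).tolist())  # [1, 1, 1, 1]

# drop_first=True removes the first category's column ("France" here);
# that category is then represented by all remaining columns being 0
reduced = pd.get_dummies(df["Country"], drop_first=True, dtype=int)
print(reduced.columns.tolist())   # ['Germany', 'Spain']
```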

Implementation

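```python
import pandas as pd

df = pd.read_csv("Salary.csv")

# Create one 0/1 dummy column per distinct country; drop_first=True drops
# one of them to avoid the dummy variable trap
dummies = pd.get_dummies(df.Country, drop_first=True)

# Replace the original Country column with the dummy columns
df = pd.concat([df.drop(columns="Country"), dummies], axis=1)
```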

Conclusion

Use Label Encoding when your data contains ordinal features, so that the integer codes reflect the real order of the categories and help the model's accuracy. It is also worth considering when a categorical feature has a very large number of distinct categories, because One Hot Encoding would then create one dummy column per category, which increases memory consumption and can make the model perform poorly.

Use One Hot Encoding when you have non-ordinal features and the number of categories in those features is small.
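To illustrate the memory concern, this sketch uses a hypothetical column with 1,000 distinct values and compares the shape of the one-hot output with a single column of integer codes. The integer codes come from pandas' `category` dtype, which works like Label Encoding (codes follow the sorted categories).

```python
import pandas as pd

# Hypothetical high-cardinality feature: 1,000 distinct city names
cities = pd.Series([f"city_{i}" for i in range(1000)])

# One Hot Encoding: one column per distinct value
one_hot = pd.get_dummies(cities)

# Label-style encoding: a single column of integer codes
label_codes = cities.astype("category").cat.codes

print(one_hot.shape)      # (1000, 1000)
print(label_codes.shape)  # (1000,)
```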
