Linear Regression based analysis works on the principle of the equation of a line, y = mx + c, where y is the value we want to predict, m is the slope of the line, and c is the intercept, the point where the line crosses the y-axis. Linear regression assumes that, for predictive problems where the target is a continuous variable rather than discrete data, predictions can be made by following this line.
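
As a minimal sketch of how m and c can be found for a single feature, assuming the usual least-squares criterion (the line that minimizes the sum of squared vertical distances to the points), the slope and intercept have a closed form. The function name `fit_line` and the data points are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = m*x + c for a single feature."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope: co-variation of x and y divided by the variation of x
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    m = sxy / sxx
    # The least-squares line always passes through the point (x_mean, y_mean)
    c = y_mean - m * x_mean
    return m, c


xs = [1, 2, 3, 4, 5]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]  # hypothetical, roughly linear data
m, c = fit_line(xs, ys)
print(f"y = {m:.2f}x + {c:.2f}")
```

Once m and c are known, a prediction for any new x is simply `m * x + c`.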

Here the best fit line is the line whose slope and intercept make it pass as close as possible to all the data points of x (the independent feature), usually by minimizing the sum of the squared differences (residuals) between the actual values and the line. Points that do not lie exactly on the line still get a prediction: the value of the line at their x. Although this technique is very popular in the Data Science community, it has limitations. It can miss small, subtle patterns in the data, and it performs poorly on data that is unevenly scattered or whose relationship between x and y is not linear.
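
To illustrate the limitation with non-linear data, here is a small sketch that fits a least-squares line to points that really lie on a line and to points that follow a U-shaped curve (y = x²). The data and the helper names `fit_line` and `sse` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = m*x + c for one feature."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    m = sxy / sxx
    return m, y_mean - m * x_mean


def sse(xs, ys, m, c):
    """Sum of squared residuals between the data and the line y = m*x + c."""
    return sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys))


xs = [-3, -2, -1, 0, 1, 2, 3]
linear_ys = [2 * x + 1 for x in xs]  # points that lie on a straight line
curved_ys = [x ** 2 for x in xs]     # points that follow a U-shaped curve

m_lin, c_lin = fit_line(xs, linear_ys)
m_cur, c_cur = fit_line(xs, curved_ys)
print(f"linear data: y = {m_lin:.2f}x + {c_lin:.2f}, SSE = {sse(xs, linear_ys, m_lin, c_lin):.2f}")
print(f"curved data: y = {m_cur:.2f}x + {c_cur:.2f}, SSE = {sse(xs, curved_ys, m_cur, c_cur):.2f}")
```

For the U-shaped data the least-squares line comes out flat (slope 0, intercept 4) and leaves a large squared error, because no straight line can follow the curve.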

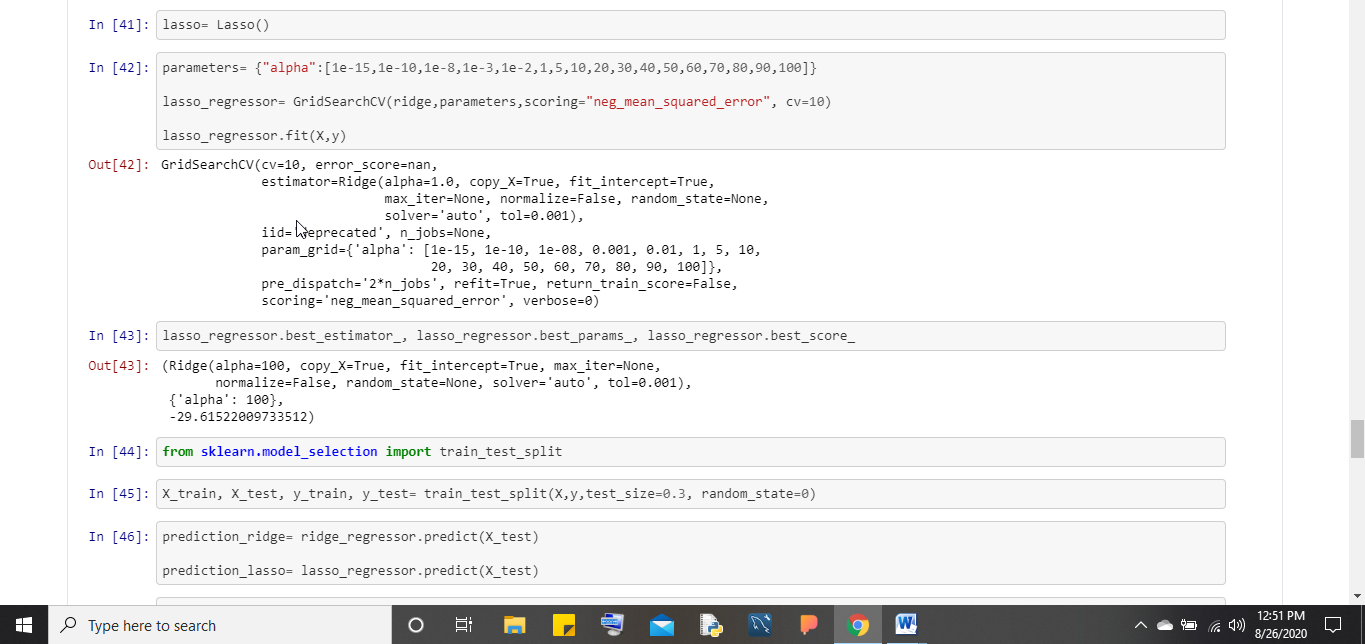

Two close relatives of linear regression, Ridge and Lasso regression, help with a related problem: overfitting. Ridge regression is also known as L2 regularization and Lasso regression as L1 regularization. An overfitted model has low bias but high variance: it fits the training data very closely but performs noticeably worse on the test data. Ridge and Lasso accept a small increase in bias in exchange for a larger reduction in variance, so that the model generalizes, i.e. shows similar accuracy on the training and test datasets. Both work by adding a penalty term to the cost function that is minimized, and the strength of that penalty is set by a hyperparameter, λ. These two techniques address a problem associated with ordinary linear regression, and their definitions along with some insights are given below:

Ridge Regression (L2 Regularization)

The formula for Ridge Regression is given as:

$$\sum_{i=1}^{n} (y_i - \hat{y}_i)^2 + \lambda \, (\text{slope})^2$$

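We try to minimize this expression, which is also called the loss or cost function. Here y_i is the actual value, ŷ_i is the value predicted by the line, and λ (lambda) controls how strongly steep slopes are penalized. λ can be any non-negative number: λ = 0 gives back ordinary linear regression, and larger values shrink the slope more. When there are several features, the penalty adds up the squared slopes of all of them.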
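
A minimal sketch of the ridge cost for a single feature, assuming (as is common) that only the slope is penalized and the intercept c is not. The function name `ridge_cost` and the data are hypothetical. It shows that with λ = 0 the cost is the plain sum of squared errors, and that with λ > 0 a slightly flatter line can have a lower total cost than a line that fits the points perfectly.

```python
def ridge_cost(xs, ys, m, c, lam):
    """Squared errors plus lam * slope**2; the intercept c is not penalized."""
    squared_errors = sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys))
    return squared_errors + lam * m ** 2


xs = [1, 2, 3]
ys = [2, 4, 6]  # made-up points lying exactly on y = 2x

# lam = 0: only the squared errors count, so the perfect fit costs 0
print(ridge_cost(xs, ys, m=2.0, c=0.0, lam=0.0))
# lam = 1: the perfect-fit slope now pays a penalty of 1 * 2**2 = 4
print(ridge_cost(xs, ys, m=2.0, c=0.0, lam=1.0))
# A flatter slope has some error (about 0.14) but a smaller penalty (about 3.61)
print(ridge_cost(xs, ys, m=1.9, c=0.0, lam=1.0))
```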

In the ridge regression formula above, the additional term λ(slope)² is what lets ridge overcome a problem of simple linear regression. Instead of choosing the line that minimizes only the sum of squared errors, ridge chooses the line that minimizes the sum of the squared errors and the penalty term. This favors lines with smaller slopes, which tend to generalize better to data the model has not seen.
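
For one feature with an unpenalized intercept, setting the derivatives of the ridge cost to zero gives a closed form: c = ȳ − m·x̄ and m = Sxy / (Sxx + λ), where Sxy and Sxx are the centered sums used by ordinary least squares. The sketch below (hypothetical function name and data) sweeps λ to show the slope shrinking toward 0 without ever reaching it.

```python
def ridge_fit(xs, ys, lam):
    """Closed-form ridge fit of y = m*x + c for one feature (intercept not penalized)."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    m = sxy / (sxx + lam)  # lam = 0 gives the ordinary least-squares slope
    c = y_mean - m * x_mean
    return m, c


xs = [1, 2, 3, 4, 5]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]  # made-up data
for lam in (0, 1, 10, 100, 1000):
    m, c = ridge_fit(xs, ys, lam)
    print(f"lambda = {lam:>4}: slope = {m:.3f}, intercept = {c:.3f}")
```

Because λ only appears in the denominator, the slope gets smaller as λ grows but stays non-zero for any finite λ.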

Meaning of a Steep Slope in Regression

A steep slope means that a unit change in x produces a large change in the predicted value of y. Very steep slopes are often a sign of overfitting, because the model then reacts strongly to small changes or noise in the input. Ridge therefore penalizes features with steep slopes, shrinking their slopes toward smaller values so that the best fit line is less sensitive to noise in the training data.

The best fit line in ridge regression can be found iteratively, for example with gradient descent, which adjusts the slope and intercept step by step until the cost stops decreasing (ridge regression also has a closed-form solution). Once the best fit line is found, a unit increase in x produces a smaller change in the prediction, i.e. the slope is less steep and overfitting is reduced. The value of λ is usually chosen through cross-validation. If λ is very large (what counts as large depends on the scale of the data), the slope is pushed toward 0 and the line approaches a flat, horizontal line.
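
A minimal gradient-descent sketch for single-feature ridge regression, assuming the cost Σ(y − (m·x + c))² + λ·m² with an unpenalized intercept. The function name, learning rate `lr`, step count, and data are hypothetical choices; a learning rate that is too large for the data (or for a very large λ) makes the updates diverge instead of converge.

```python
def ridge_gradient_descent(xs, ys, lam, lr=0.001, steps=20000):
    """Minimize sum((y - (m*x + c))**2) + lam * m**2 with plain gradient descent."""
    m, c = 0.0, 0.0
    for _ in range(steps):
        residuals = [y - (m * x + c) for x, y in zip(xs, ys)]
        # Partial derivatives of the ridge cost; only the slope gets the penalty term
        grad_m = -2 * sum(x * r for x, r in zip(xs, residuals)) + 2 * lam * m
        grad_c = -2 * sum(residuals)
        m -= lr * grad_m
        c -= lr * grad_c
    return m, c


xs = [1, 2, 3, 4, 5]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]  # made-up data
for lam in (0, 10, 100):
    m, c = ridge_gradient_descent(xs, ys, lam)
    print(f"lambda = {lam:>3}: slope = {m:.3f}, intercept = {c:.3f}")
```

As λ increases, the slope found by the iterations gets flatter, which matches the behaviour described above.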

Lasso Regression (L1 Regularization)

The formula for lasso differs slightly from the one for ridge regression:

$$\sum_{i=1}^{n} (y_i - \hat{y}_i)^2 + \lambda \, |\text{slope}|$$

Here |slope| means the magnitude (absolute value) of the slope.
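
A minimal sketch of the lasso cost for one feature, again assuming the intercept is not penalized (the function name and data are hypothetical), plus a side-by-side of the two penalty terms for λ = 1. Because the penalty uses the absolute value, a slope of −0.5 is penalized exactly like 0.5, and for slopes smaller than 1 in magnitude the absolute value is larger than the square.

```python
def lasso_cost(xs, ys, m, c, lam):
    """Squared errors plus lam * |slope|; the intercept c is not penalized."""
    squared_errors = sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys))
    return squared_errors + lam * abs(m)


# Ridge uses slope**2, lasso uses |slope| (both shown with lam = 1)
for slope in (2.0, 1.0, 0.5, 0.1, -0.1):
    print(f"slope = {slope:>5}: ridge penalty = {slope ** 2:.3f}, lasso penalty = {abs(slope):.3f}")

xs = [1, 2, 3]
ys = [2, 4, 6]  # made-up points on y = 2x, so only the penalty contributes
print(lasso_cost(xs, ys, m=2.0, c=0.0, lam=1.0))
```

The comparison hints at why lasso treats small slopes differently: as a slope approaches 0, the squared penalty fades away much faster than the absolute one.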

Lasso regression not only helps reduce overfitting but also performs feature selection. Because the penalty uses the absolute value of the slope rather than its square, lasso can shrink the slopes of less useful features all the way to exactly 0, and we can then neglect those features. Ridge regression, by contrast, shrinks slopes toward 0 but does not make them exactly 0, so every feature stays in the model. Intuitively, for a small slope the squared ridge penalty becomes almost negligible, while the absolute-value lasso penalty keeps pushing the slope down until it reaches 0.
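
To see the feature-selection effect, here is a sketch using coordinate descent, a common way to fit lasso (an assumption here, not necessarily the method a given library uses). Each slope is updated in turn; for lasso the update uses soft-thresholding, which returns exactly 0 when a feature's correlation with the remaining error is small, while the ridge update only divides by a larger number. The data are made up: y depends strongly on the first feature and only weakly (slope 0.1) on the second. The function names and the `penalty` parameter are hypothetical.

```python
def soft_threshold(a, t):
    """Shrink a toward 0 by t, returning exactly 0.0 when |a| <= t."""
    if a > t:
        return a - t
    if a < -t:
        return a + t
    return 0.0


def coordinate_descent(X, y, lam, penalty="l1", sweeps=100):
    """Minimize sum((y - X b - c)**2) + lam * P(b), one slope at a time.

    X is a list of rows. P is sum(|b|) for "l1" (lasso) and sum(b**2) for
    "l2" (ridge). The intercept c is not penalized.
    """
    n, p = len(X), len(X[0])
    # Center features and target so the intercept drops out of the updates
    x_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_means[j] for j in range(p)] for row in X]
    yc = [v - y_mean for v in y]
    b = [0.0] * p
    for _ in range(sweeps):
        for j in range(p):
            # Partial residual: prediction error with feature j left out
            r = [yc[i] - sum(Xc[i][k] * b[k] for k in range(p) if k != j) for i in range(n)]
            rho = sum(Xc[i][j] * r[i] for i in range(n))
            z = sum(Xc[i][j] ** 2 for i in range(n))
            if penalty == "l1":
                b[j] = soft_threshold(rho, lam / 2) / z
            else:
                b[j] = rho / (z + lam)
    c = y_mean - sum(b[j] * x_means[j] for j in range(p))
    return b, c


# Made-up data generated from y = 3*x1 + 0.1*x2 + 1
X = [[1, 2], [2, -1], [3, -2], [4, -1], [5, 2]]
y = [4.2, 6.9, 9.8, 12.9, 16.2]
lasso_b, _ = coordinate_descent(X, y, lam=4.0, penalty="l1")
ridge_b, _ = coordinate_descent(X, y, lam=4.0, penalty="l2")
print("lasso slopes:", lasso_b)  # the weak second feature is dropped (exactly 0.0)
print("ridge slopes:", ridge_b)  # the second slope is shrunk but stays non-zero
```

With the same λ, lasso removes the weak feature entirely, while ridge keeps a small positive slope for it.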

Conclusion

We can conclude that both Ridge and Lasso can help the basic Linear Regression model perform better and give better predictions on unseen data by reducing overfitting, with Lasso additionally performing feature selection, which leads to higher accuracy in predictive analysis.
