Boosting is an ensemble learning technique that turns a set of weak learners into a strong learner. The weak learners, usually shallow decision trees, are trained one after another, and each new learner concentrates on the records that the earlier ones got wrong; their predictions are then combined, typically by a weighted vote, to give the final result. This is different from bagging, where the learners are trained independently on bootstrap samples. Popular boosting techniques include Gradient Boosting, XGBoost, AdaBoost, and CatBoost. They all share the same principle, although the mechanism differs from one to another. Today we will discuss AdaBoost to give you a better idea of how this technique works.

What is AdaBoost?

AdaBoost (Adaptive Boosting) is a boosting technique that converts weak learners into a strong learner by assigning a weight to every sample and training decision trees on the weighted data. Initially every sample gets the same weight, 1/n, where n is the total number of records in the dataset. In AdaBoost the decision trees are called base learners and have a depth of only 1; such trees are known as stumps. We build one stump for each feature in the dataset and keep the best one, that is, the one with the lowest entropy (the highest information gain). After selecting the best stump, we check how accurately it classifies the records against the target. If, say, the stump classifies 90% of the records correctly and misclassifies the remaining 10%, we compute the total error from the misclassified records and update the sample weights so that the next stump pays more attention to them.
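To make the selection step concrete, here is a minimal Python sketch that assigns the initial 1/n weights and picks the feature whose stump gives the highest information gain. The toy data and the names `entropy`, `information_gain`, `features`, and `is_fit` are made up for illustration, and the sketch assumes binary 0/1 features.

```python
import math

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    result = 0.0
    for cls in set(labels):
        p = labels.count(cls) / n
        result -= p * math.log2(p)
    return result

def information_gain(feature, labels):
    """Entropy reduction obtained by splitting `labels` on a binary feature."""
    left = [y for x, y in zip(feature, labels) if x == 0]
    right = [y for x, y in zip(feature, labels) if x == 1]
    n = len(labels)
    after_split = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(labels) - after_split

# Hypothetical toy data: two binary features and a binary target.
features = {
    "exercises": [1, 1, 1, 0, 0, 0],
    "smokes":    [0, 1, 0, 1, 0, 1],
}
is_fit = [1, 1, 1, 0, 0, 1]

n = len(is_fit)
sample_weights = [1 / n] * n  # every record starts with weight 1/n

# One stump per feature; keep the one with the highest information gain.
gains = {name: information_gain(col, is_fit) for name, col in features.items()}
best_feature = max(gains, key=gains.get)
print(best_feature, gains)
```

In this toy data the "exercises" split separates the classes better than the "smokes" split, so it is the stump that gets picked.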

So how does AdaBoost work with decision stumps? Decision stumps are like the trees in a Random Forest, but not “fully grown”: they have one node and two leaves. AdaBoost uses a forest of such stumps rather than full trees.

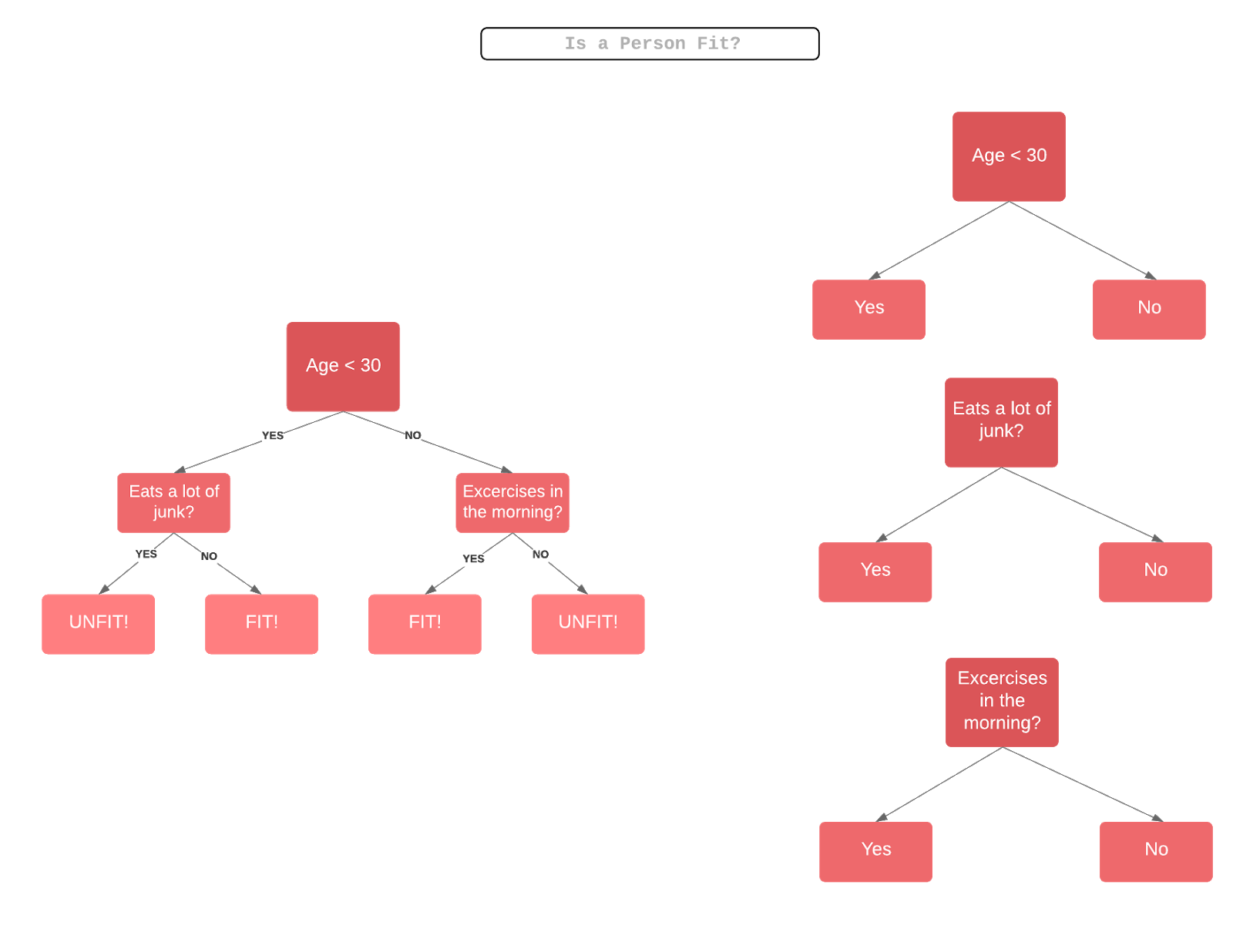

A stump on its own is not a good way to make decisions. A full-grown tree can combine decisions from all the variables to predict the target value; a stump can use only one variable. Let’s go through the AdaBoost algorithm step by step, looking at several variables to determine whether a person is “fit” (in good health) or not.

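The total error of a stump is the sum of the sample weights of the records it misclassifies:

$$\text{Total error} = \sum_{i \,\in\, \text{misclassified}} w_i$$

In the first round every weight is 1/n, so this is simply the number of misclassified records divided by the total number of records.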

After finding the total error, we compute the performance of the stump using the formula:

$$\text{Performance} = \frac{1}{2}\ln\left(\frac{1 - \text{Total error}}{\text{Total error}}\right)$$
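As a rough sketch, the error and performance calculations could look like this in Python. The helper names `total_error` and `stump_performance` and the example predictions are assumptions for illustration. The error is taken as the sum of the weights of the misclassified records, which equals the fraction misclassified while all weights are still 1/n. The small `eps` clamp is an added guard, not part of the formula, so that a perfect stump does not cause a division by zero.

```python
import math

def total_error(weights, y_true, y_pred):
    """Sum of the sample weights of the misclassified records."""
    return sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)

def stump_performance(error, eps=1e-10):
    """0.5 * ln((1 - error) / error), with the error clamped away from 0 and 1."""
    error = min(max(error, eps), 1 - eps)
    return 0.5 * math.log((1 - error) / error)

# Hypothetical stump that gets 9 of 10 records right (the 90% / 10% case).
y_true = [1, 1, 1, 0, 0, 1, 0, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 1, 0, 1, 0, 0]
weights = [1 / len(y_true)] * len(y_true)

err = total_error(weights, y_true, y_pred)
perf = stump_performance(err)
print(err, perf)
```

A stump with an error of 0.5 (no better than guessing) gets a performance of 0; the lower the error, the larger the performance.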

Next, the weights are updated so that the weights of the correctly classified records decrease and the weights of the wrongly classified records increase. For wrongly classified records the formula is:

$$\text{New weight} = \text{Previous weight} \times e^{\text{Performance}}$$

For correctly classified records the formula is:

$$\text{New weight} = \text{Previous weight} \times e^{-\text{Performance}}$$

After updating the weights, we check whether they sum to 1. If they do not, we normalize them so that they do:

$$\text{Normalized weight} = \frac{\text{Updated weight}}{\sum \text{Updated weights}}$$
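The update and normalization steps can be sketched together in a few lines of Python. The function name `update_weights` and the example data are hypothetical; the performance value is computed from the formula for a stump with a total error of 0.1.

```python
import math

def update_weights(weights, y_true, y_pred, performance):
    """Raise the weights of misclassified records, lower the rest, then normalize."""
    updated = []
    for w, t, p in zip(weights, y_true, y_pred):
        sign = 1 if t != p else -1  # +performance if wrong, -performance if right
        updated.append(w * math.exp(sign * performance))
    total = sum(updated)
    return [w / total for w in updated]

# Hypothetical round: 10 records, only the last one misclassified.
y_true = [1, 1, 1, 0, 0, 1, 0, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 1, 0, 1, 0, 0]
weights = [0.1] * 10
performance = 0.5 * math.log((1 - 0.1) / 0.1)

new_weights = update_weights(weights, y_true, y_pred, performance)
print(new_weights)
```

In this example the single misclassified record ends up with a normalized weight of 0.5, as much as the nine correctly classified records combined, which is what pushes the next stump to focus on it.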

The next step is to build stumps again, but first the normalized weights are turned into buckets. Each record gets a bucket whose size equals its normalized weight: the first bucket runs from 0 to the first normalized weight, the second from the first normalized weight to the sum of the first and second, and so on, so that together the buckets cover the range from 0 to 1.
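A minimal sketch of the bucket idea, assuming the buckets are used by drawing random numbers between 0 and 1 and looking up which bucket each one lands in. The names `build_buckets` and `resample_indices` are hypothetical, and the weights are example values.

```python
import bisect
import itertools
import random

def build_buckets(weights):
    """Upper edge of each record's bucket: the running sum of the weights."""
    return list(itertools.accumulate(weights))

def resample_indices(weights, n_draws, rng):
    """Draw record indices so that larger weights are picked more often."""
    edges = build_buckets(weights)
    picks = []
    for _ in range(n_draws):
        r = rng.random() * edges[-1]         # random point in [0, total weight)
        idx = bisect.bisect_right(edges, r)  # first bucket whose upper edge exceeds r
        picks.append(min(idx, len(weights) - 1))  # guard against rounding at the top edge
    return picks

weights = [0.5, 0.25, 0.25]   # buckets: [0, 0.5), [0.5, 0.75), [0.75, 1.0)
rng = random.Random(0)        # fixed seed so the run is repeatable
new_indices = resample_indices(weights, 1000, rng)
print(build_buckets(weights), new_indices[:10])
```

Python's standard library offers the same kind of weighted draw directly through `random.choices(population, weights=..., k=...)`; the explicit version above is only meant to show the bucket lookup.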

After this, the model draws random numbers between 0 and 1, checks which bucket each number falls into, and adds the corresponding record to a new dataset. Records with larger weights have larger buckets, so the misclassified records are picked more often. The whole process of building stumps, computing the error, and updating the weights is then repeated on the new dataset until the chosen number of stumps has been built or the error stops decreasing. To classify the test dataset, the model collects the predictions of all the stumps and returns the class with the largest vote, where each stump's vote counts in proportion to its performance. In regression, the stumps' predictions are instead combined into a single value, for example a weighted average or weighted median, which is returned to the user.
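In the usual formulation of AdaBoost, the final vote is weighted: each stump's vote counts as much as its performance. A small Python sketch of that vote follows; the function name `weighted_vote` and the three example stumps are made up for illustration.

```python
def weighted_vote(predictions, performances):
    """Return the label whose supporting stumps have the largest total performance."""
    totals = {}
    for label, perf in zip(predictions, performances):
        totals[label] = totals.get(label, 0.0) + perf
    return max(totals, key=totals.get)

# Hypothetical predictions of three stumps for one test record.
predictions = ["fit", "not fit", "not fit"]
performances = [1.5, 0.4, 0.6]

result = weighted_vote(predictions, performances)
print(result)
```

Here two of the three stumps say "not fit", but their combined performance (1.0) is lower than that of the single stump saying "fit" (1.5), so the weighted vote returns "fit" where a plain majority vote would not.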

AdaBoost requires the user to specify a set of weak learners (alternatively, it generates a set of weak learners before the actual learning process starts). It then learns how much weight each learner should get when the learners are added together into a strong learner. A learner's weight depends on how well it predicts the samples: each sample it predicts wrongly reduces its weight a little. The process repeats until convergence. In AdaBoost, the loss and the performance are the quantities used to update the weights.
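Putting the steps together, the whole loop can be sketched in plain Python. This is a simplified illustration under several assumptions, not a reference implementation: labels are coded as -1 and +1, stumps are chosen by lowest weighted error rather than by entropy, and the weights are passed straight to the next stump instead of being used to resample a new dataset (a common variant). All function names and the toy data are hypothetical.

```python
import math

def fit_stump(X, y, weights):
    """Try every feature/threshold/direction; return the lowest weighted-error stump."""
    best = None
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            for polarity in (1, -1):
                preds = [polarity if row[j] <= threshold else -polarity for row in X]
                err = sum(w for w, p, t in zip(weights, preds, y) if p != t)
                if best is None or err < best[0]:
                    best = (err, j, threshold, polarity)
    return best

def stump_predict(stump, row):
    _, j, threshold, polarity = stump
    return polarity if row[j] <= threshold else -polarity

def adaboost_fit(X, y, n_rounds=10, eps=1e-10):
    n = len(y)
    weights = [1 / n] * n                        # initial sample weights
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, weights)
        err = min(max(stump[0], eps), 1 - eps)   # clamp to avoid division by zero
        perf = 0.5 * math.log((1 - err) / err)   # performance of the stump
        ensemble.append((perf, stump))
        if stump[0] < eps:                       # perfect stump: nothing left to fix
            break
        # t * prediction is +1 when correct and -1 when wrong, so correct
        # records are scaled by e^-perf and wrong ones by e^+perf.
        weights = [w * math.exp(-perf * t * stump_predict(stump, row))
                   for w, row, t in zip(weights, X, y)]
        total = sum(weights)
        weights = [w / total for w in weights]   # normalize to sum to 1
    return ensemble

def adaboost_predict(ensemble, row):
    """Performance-weighted vote of all stumps."""
    score = sum(perf * stump_predict(stump, row) for perf, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy 1-D data that no single stump can classify perfectly.
X = [[0], [1], [2], [3], [4], [5], [6], [7], [8], [9]]
y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

model = adaboost_fit(X, y, n_rounds=3)
train_preds = [adaboost_predict(model, row) for row in X]
print(train_preds == y)
```

On this toy data the best single stump misclassifies three of the ten records, while the weighted vote of three stumps classifies all ten training records correctly.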

Conclusion

If you are competing in Kaggle competitions or hackathons and want higher accuracy, AdaBoost and other boosting algorithms are worth trying: their built-in mechanisms, such as reweighting the misclassified records, do much of the work of improving a weak model for you.
